Also, how can wall deformation be implemented in CFDEM, for example gradually forcing the flow channel open as shown below?

小刘lyw

Posts

-

Can CFDEM simulate dynamic cracking of a wall with finite thickness under fluid loading?

-

Sharing my first CFDEM+OpenFOAM+LIGGGHTS build and installation

@capillaryFix Got it, thank you very much!

-

Sharing my first CFDEM+OpenFOAM+LIGGGHTS build and installation

@李东岳 Got it, thank you!

-

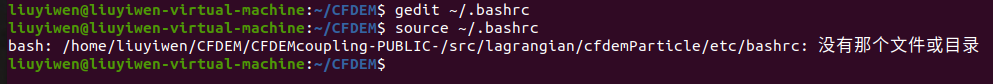

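Sharing my first CFDEM+OpenFOAM+LIGGGHTS build and installation

@true Hello, while carrying out step "7. Configure the CFDEM environment variables and paths" I got this message.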

What might be causing it?

-

How to monitor outlet particle velocity with ParaView

-

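How to monitor outlet particle velocity with ParaView

@YHY Haha, I only started using it recently myself. Feel free to get in touch any time if you have questions.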

-

CFDEM run interrupted with signal 9 (Killed)

@李东岳 Many thanks for clearing that up!

-

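CFDEM run interrupted with signal 9 (Killed)

@李东岳 Hello, the simulation injects roughly seven million particles per second, which is indeed quite large. Is there any way around this? Would lowering the Young's modulus, running on more nodes, or switching off the particle-particle interaction forces help?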
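Signal 9 is SIGKILL, which a process cannot catch, so no error message gets printed. One common (but not the only) cause on a cluster is the job exceeding its memory limit. The sketch below is a rough back-of-the-envelope check of how fast memory might grow at a given injection rate. The `bytes_per_particle` value is a hypothetical placeholder, not a LIGGGHTS figure; it would need to be measured from a small test run.

```python
GIB = 2**30  # bytes in one GiB


def particles_in_domain(injection_rate, elapsed_time, removed=0):
    """Particles present after elapsed_time seconds of steady injection."""
    return max(0, int(injection_rate * elapsed_time) - removed)


def estimated_memory_gib(n_particles, bytes_per_particle):
    """Memory footprint in GiB for an assumed per-particle cost."""
    return n_particles * bytes_per_particle / GIB


def time_until_limit(injection_rate, bytes_per_particle, memory_limit_gib):
    """Simulated seconds until the assumed footprint reaches the limit."""
    return memory_limit_gib * GIB / (injection_rate * bytes_per_particle)


if __name__ == "__main__":
    rate = 7_000_000           # particles per simulated second (from the post)
    bytes_per_particle = 500   # ASSUMPTION: placeholder, measure on a small case
    limit_gib = 64             # ASSUMPTION: hypothetical memory limit of the job
    n = particles_in_domain(rate, 1.0)
    print("particles after 1 s:", n)
    print("estimated GiB:", estimated_memory_gib(n, bytes_per_particle))
    print("seconds to limit:", time_until_limit(rate, bytes_per_particle, limit_gib))
```

If the estimate approaches the available memory, reducing the particle count (or spreading it over more nodes) is likely to matter more than changing contact parameters, though that is only what this simple estimate suggests.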

-

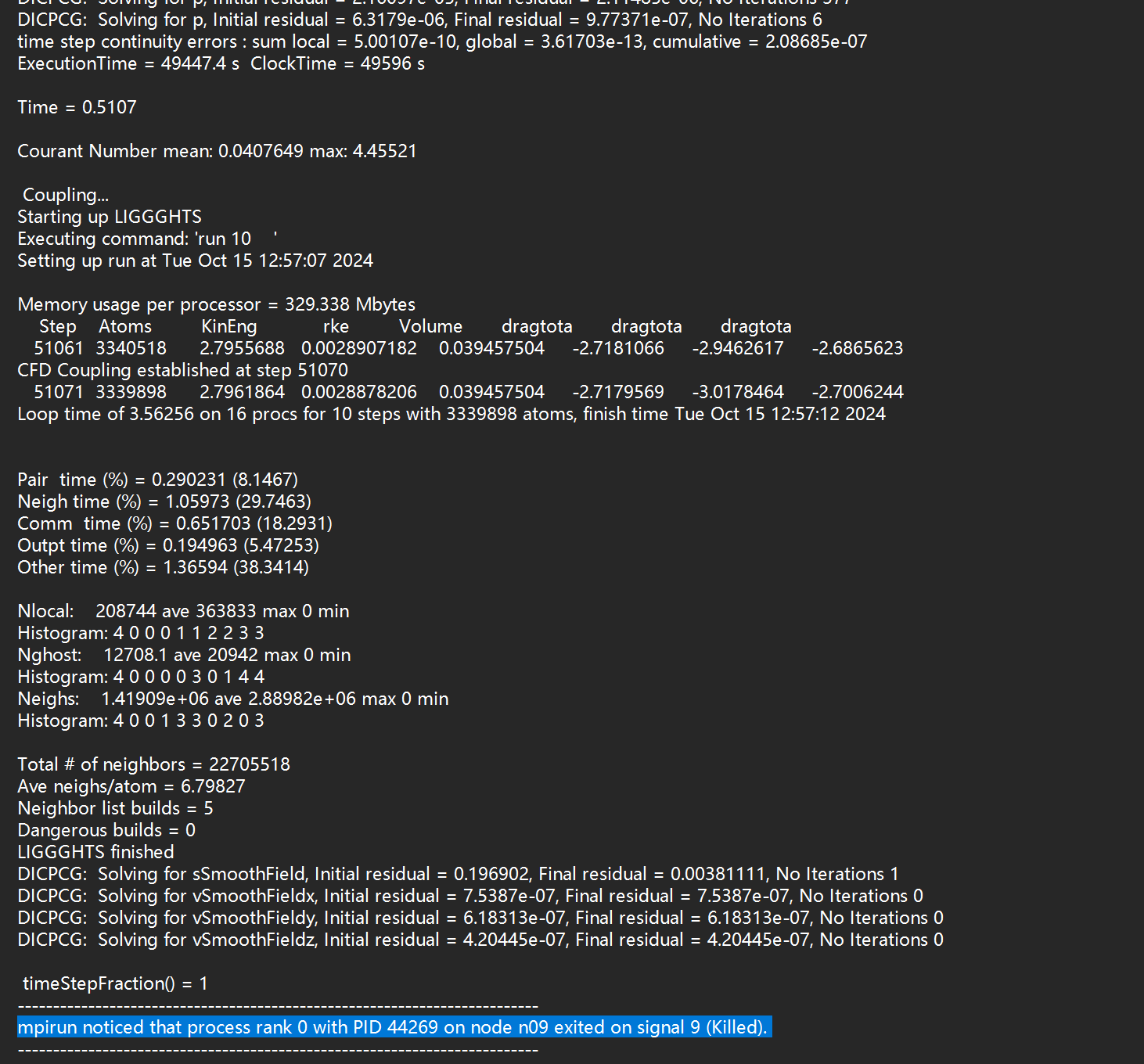

CFDEM run interrupted with signal 9 (Killed)

The simulation was interrupted without any error output. Only the following message appeared:

mpirun noticed that process rank 0 with PID 44269 on node n09 exited on signal 9 (Killed).

Has anyone run into a similar problem?

-

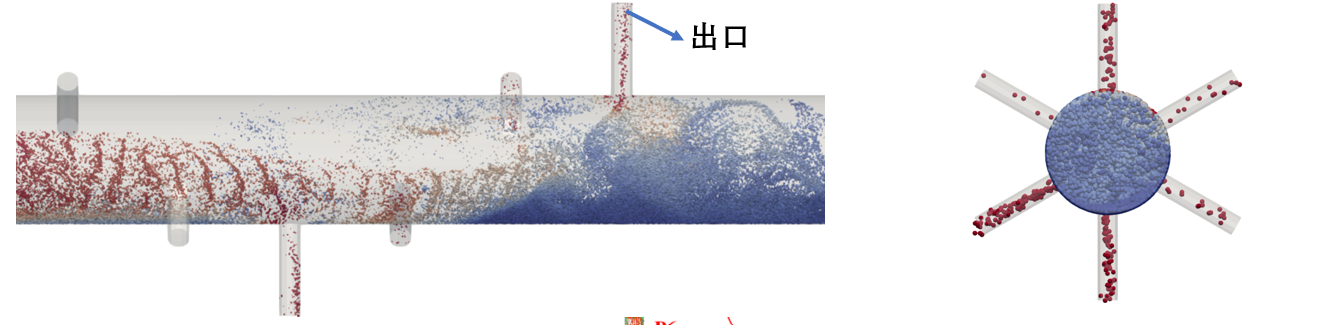

How to monitor outlet particle velocity with ParaView

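Hello everyone, I have recently been running proppant transport simulations, and the result looks like this. The ends of the model's six branches act as outlets, and I would like to post-process in ParaView to extract the particle velocities at the six outlets.

I tried the method from the article below, mapping the particle velocities with STL files of the outlets, but the results were poor.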

https://blog.csdn.net/qq_22182289/article/details/117090868

Can anyone recommend a filter to use, or is it possible to add code to the LIGGGHTS input file to do this?

-
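For the outlet velocity question above, one option is to do the selection outside ParaView: pick the particles lying in a thin box just upstream of each outlet and average their velocities. Below is a minimal sketch, assuming the particle positions and velocities have already been read from a dump file into plain lists. The function name, the box extents, and the sample data are all hypothetical.

```python
from math import sqrt


def mean_velocity_in_box(positions, velocities, box_min, box_max):
    """Return (count, mean velocity vector, mean speed) for particles in the box.

    positions, velocities: sequences of (x, y, z) tuples, same ordering.
    box_min, box_max: opposite corners of an axis-aligned box at the outlet.
    """
    selected = [
        v for p, v in zip(positions, velocities)
        if all(lo <= c <= hi for c, lo, hi in zip(p, box_min, box_max))
    ]
    n = len(selected)
    if n == 0:
        return 0, (0.0, 0.0, 0.0), 0.0
    mean_vec = tuple(sum(v[i] for v in selected) / n for i in range(3))
    mean_speed = sum(sqrt(v[0]**2 + v[1]**2 + v[2]**2) for v in selected) / n
    return n, mean_vec, mean_speed


if __name__ == "__main__":
    # Hypothetical sample: three particles, two of them near an outlet at x = 1
    positions = [(0.95, 0.0, 0.0), (0.5, 0.0, 0.0), (0.99, 0.01, 0.0)]
    velocities = [(1, 0, 0), (5, 0, 0), (3, 0, 0)]
    outlet_box = ((0.9, -0.1, -0.1), (1.0, 0.1, 0.1))
    print(mean_velocity_in_box(positions, velocities, *outlet_box))
```

Calling this once per outlet box and per output time step would give a velocity history for each of the six outlets. An axis-aligned box only fits outlets aligned with the coordinate axes; inclined branches would need the coordinates rotated first.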

OpenFOAM v2012 waves2foam wave generation problem

@bike-北辰 I may be imagining it, but were your semicolons typed with a Chinese input method?

-

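ParaView crashes when post-processing particles with Glyph Sphere

Hello everyone, I am using ParaView 5.9.1 to post-process particles, and the VTK file is about 900 MB. Glyph with Arrow runs fine, but after switching to Sphere, ParaView stops responding and shows a pop-up, as follows.

No error message is given and it then crashes. The system event log shows the following error.

What could be the cause?

-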

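Courant number grows rapidly with adaptive mesh refinement and the run aborts

@李东岳 On the CFDEM side I am using the IB solver, and the CFD calculation uses the pisoFoam solver.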

-

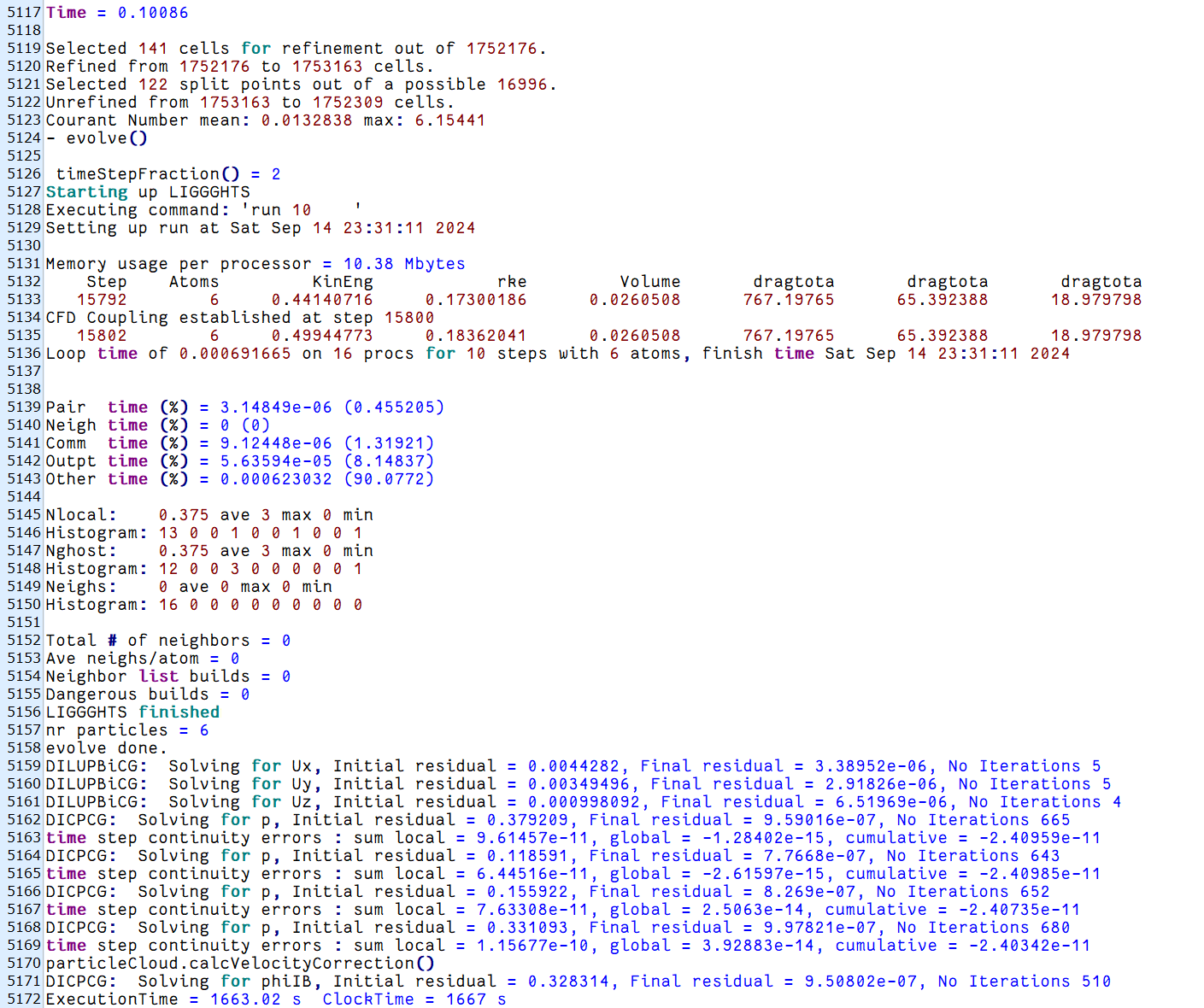

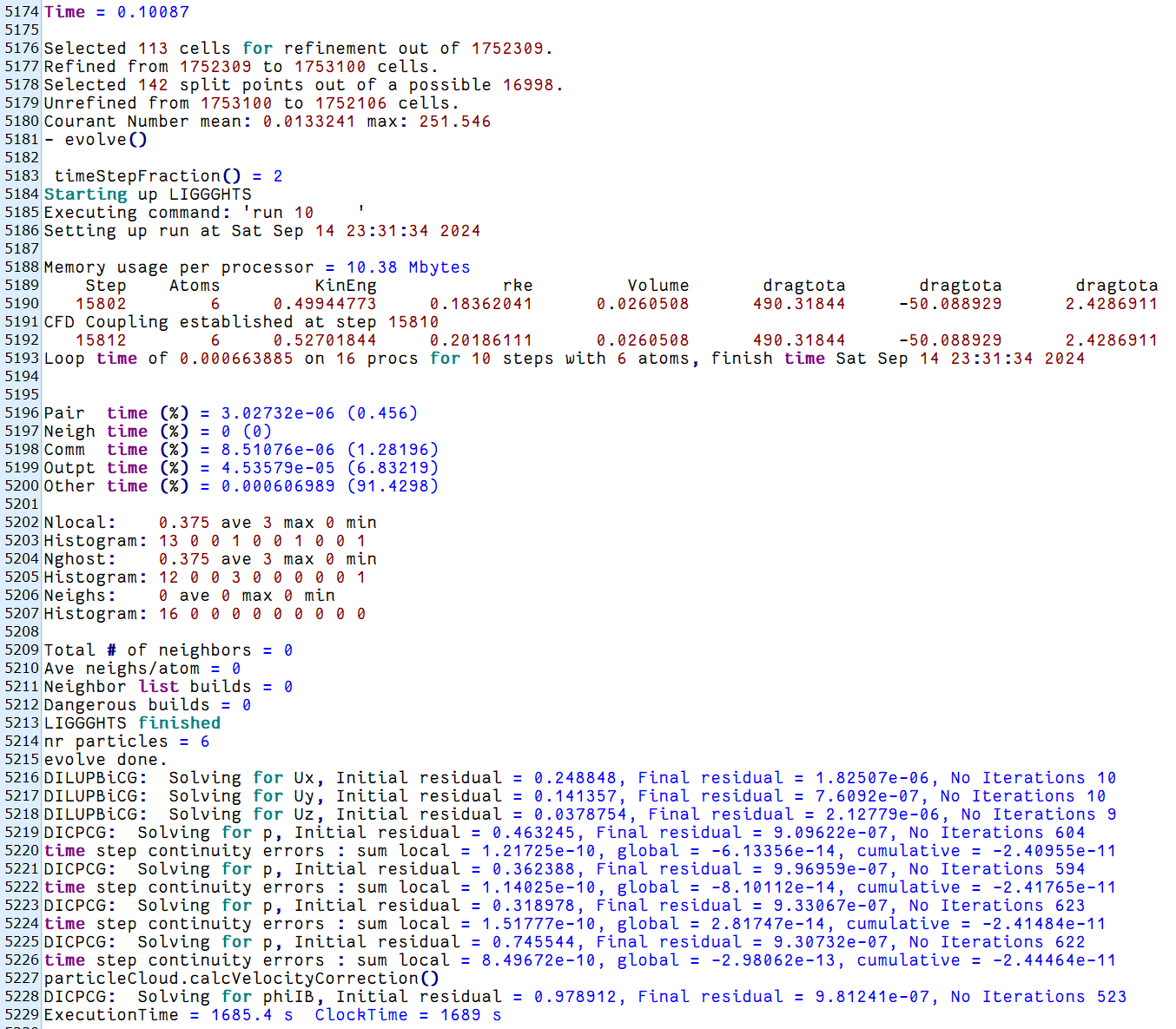

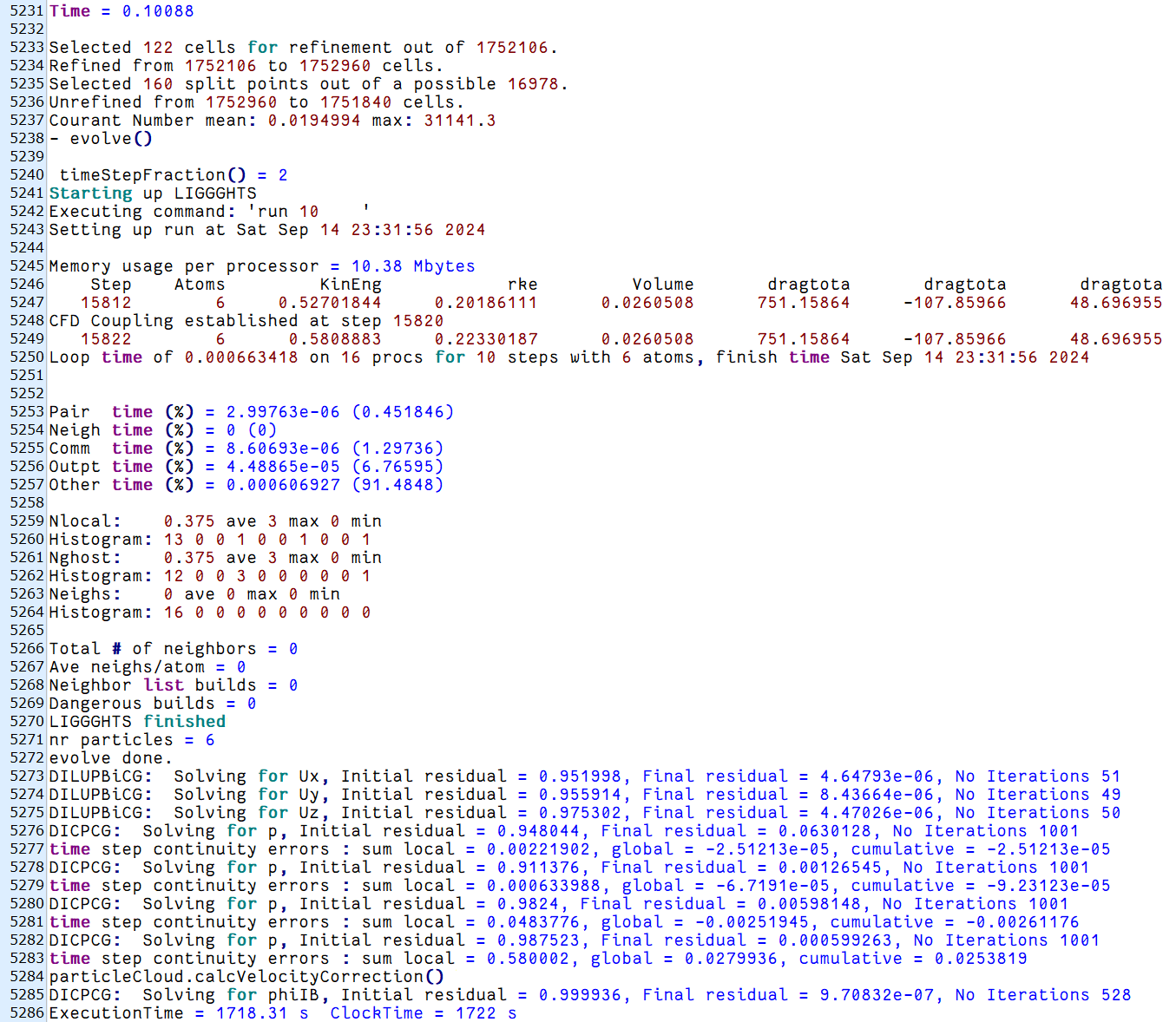

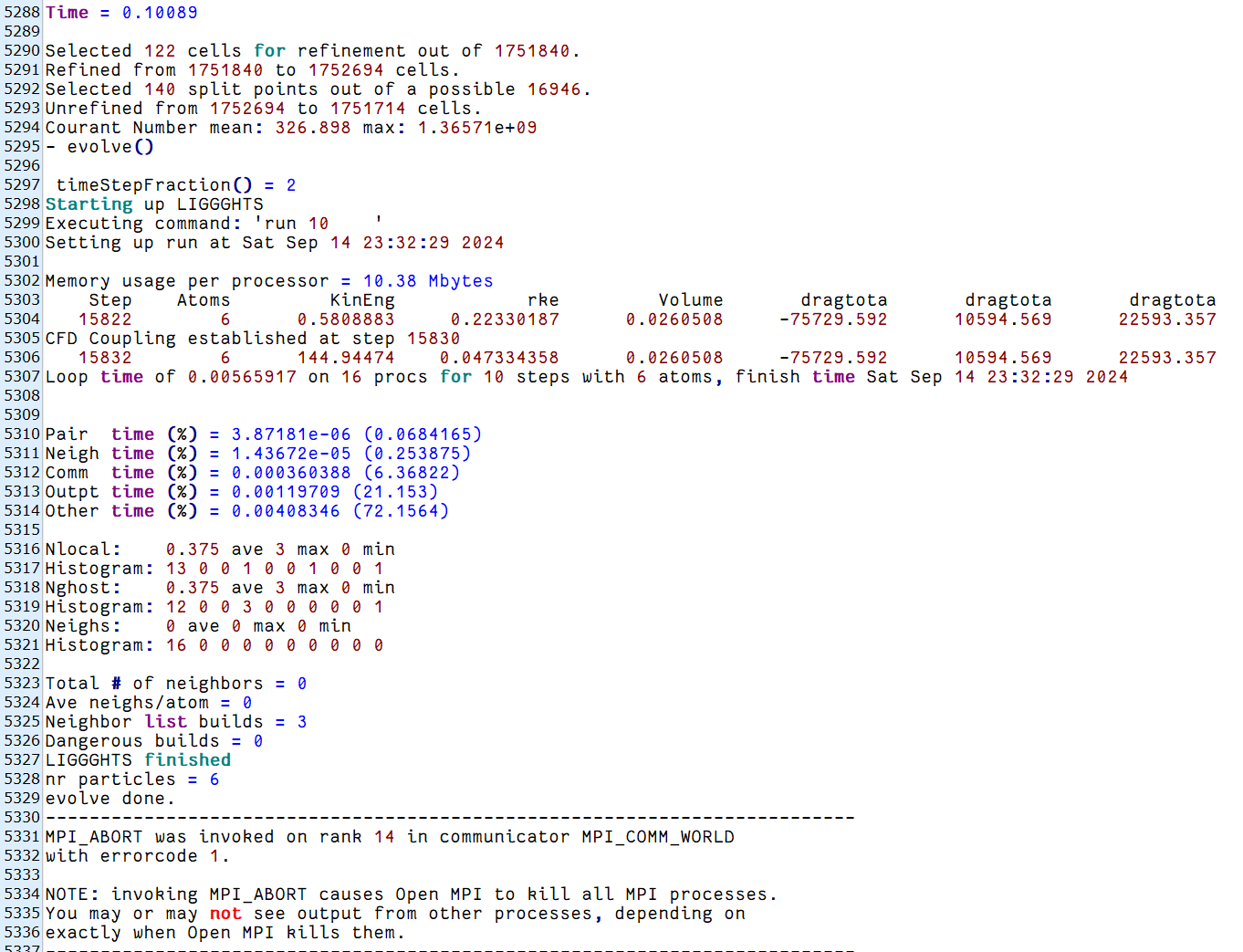

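Courant number grows rapidly with adaptive mesh refinement and the run aborts

Hello everyone, I am currently using an adaptive mesh to simulate a sphere falling in a wellbore, but the simulation aborts, and the Courant number grows exponentially in the time steps just before the abort.

I tried reducing the time step. The run lasted a little longer, but the Courant number still grew exponentially. How should this be dealt with?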
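As a quick sanity check on the time step, the Courant number of a cell is Co = |u| Δt / Δx, so every refinement level that halves the cell size doubles Co at a fixed Δt. The sketch below just evaluates that relation. It assumes each refinement level halves the cell edge length, and the velocity and mesh values are hypothetical.

```python
def courant_number(speed, dt, cell_size):
    """Co = |u| * dt / dx for a single cell."""
    return speed * dt / cell_size


def max_time_step(speed, cell_size, max_co):
    """Largest dt that keeps Co at or below max_co for this cell."""
    return max_co * cell_size / speed


def refined_cell_size(base_size, level):
    """Cell edge length after `level` refinements, ASSUMING each halves the edge."""
    return base_size / 2**level


if __name__ == "__main__":
    speed = 2.0      # m/s, hypothetical peak fluid velocity near the sphere
    base_dx = 0.01   # m, hypothetical unrefined cell size
    dt = 1e-3        # s, hypothetical fixed time step
    for level in range(4):
        dx = refined_cell_size(base_dx, level)
        print(level, courant_number(speed, dt, dx), max_time_step(speed, dx, 0.5))
```

This only bounds the time step from the mesh side. If Co still blows up after the time step satisfies this estimate at the finest level, the velocity itself is probably diverging, which points to something other than the time step.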

Below is the error output from when the run aborted:

-

How to monitor particle flow velocity in CFDEM

@chapaofan Thank you very much for the suggestion!

-

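How to monitor particle flow velocity in CFDEM

Hello everyone, I need to simulate the flow of a large number of fine particles and fluid through a multi-branch pipe, and I want to obtain the particle distribution among the branches from the simulation. One paper mentions that this distribution can be obtained by monitoring the particle flow velocity in each branch ( https://onepetro.org/SJ/article/28/04/1650/516504/Experimental-and-3D-Numerical-Investigation-on ). I have the following questions: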

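1. How can the particle flow velocity be monitored at one or several locations in CFDEM?

2. Does the flow velocity in the different branches reflect the particle distribution, and can the distribution be obtained by monitoring the velocity?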
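On the second question, an alternative to inferring the distribution from velocity is to count particles directly: compare two consecutive snapshots and count the particle IDs that crossed a monitoring plane in each branch. This is a minimal sketch, assuming each snapshot maps particle ID to its coordinate along the branch axis. All names and sample values are hypothetical.

```python
def count_crossings(prev_coords, curr_coords, plane_coord):
    """Count particles that moved from below the plane to at or beyond it.

    prev_coords, curr_coords: dict of particle id -> coordinate along the
    branch axis at two consecutive output times.
    """
    count = 0
    for pid, x_prev in prev_coords.items():
        x_curr = curr_coords.get(pid)  # None if the particle left the domain
        if x_curr is not None and x_prev < plane_coord <= x_curr:
            count += 1
    return count


def branch_fractions(counts):
    """Convert per-branch crossing counts into fractions of the total."""
    total = sum(counts.values())
    if total == 0:
        return {name: 0.0 for name in counts}
    return {name: n / total for name, n in counts.items()}


if __name__ == "__main__":
    # Hypothetical snapshots for one branch with a monitoring plane at 0.5
    prev = {1: 0.1, 2: 0.4, 3: 0.6}
    curr = {1: 0.3, 2: 0.55, 4: 0.7}
    print(count_crossings(prev, curr, 0.5))
    print(branch_fractions({"branch_A": 3, "branch_B": 1}))
```

Summing the crossings over all output intervals gives the number of particles that entered each branch. The output interval has to be short enough that a particle cannot pass the plane and leave the domain between two snapshots, otherwise it is missed.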

Any guidance would be much appreciated!

-

CFDEM run aborts, MPI_ABORT was invoked on rank 9

@星星星星晴 OK, I will check that. Thanks a lot!

-

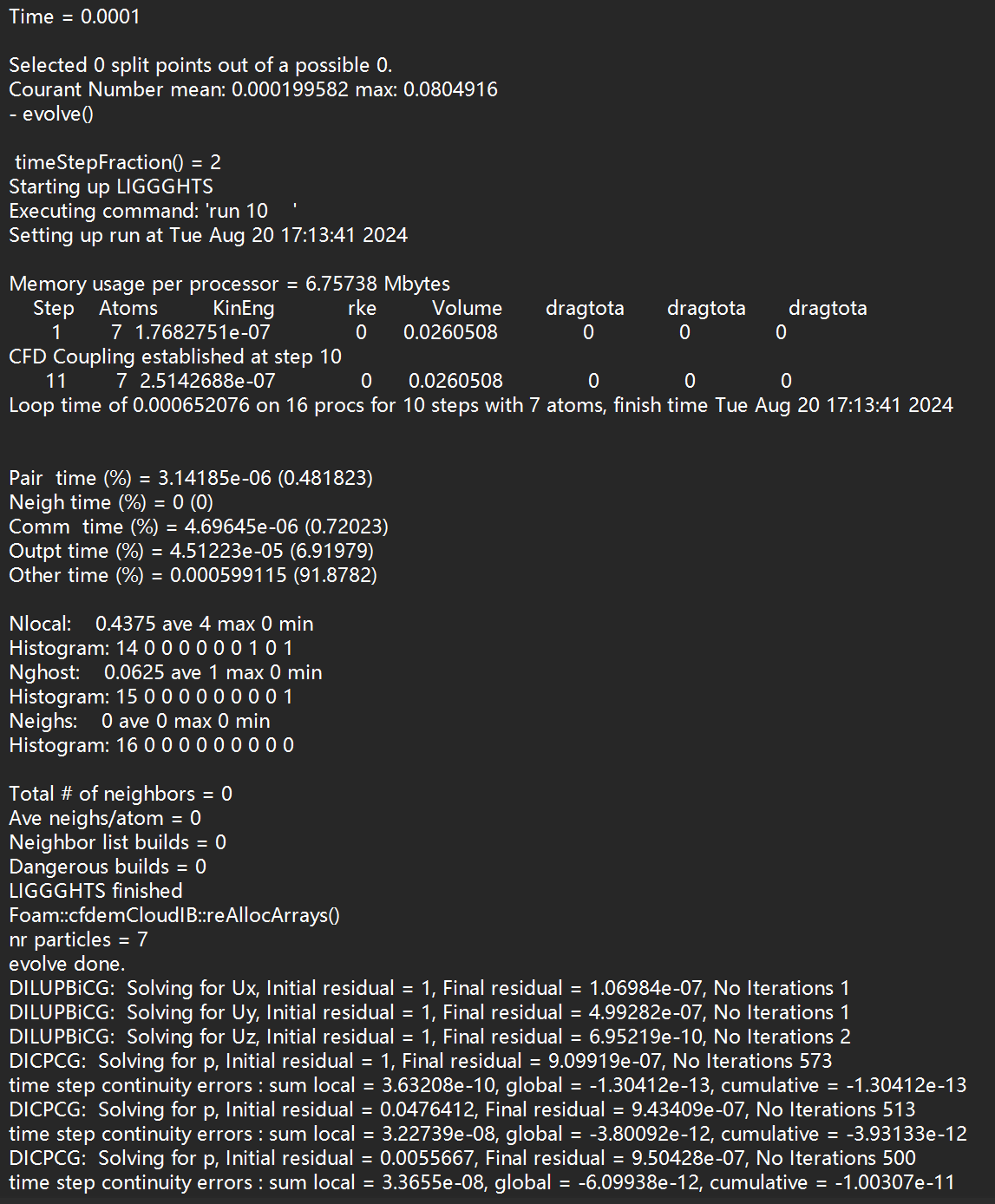

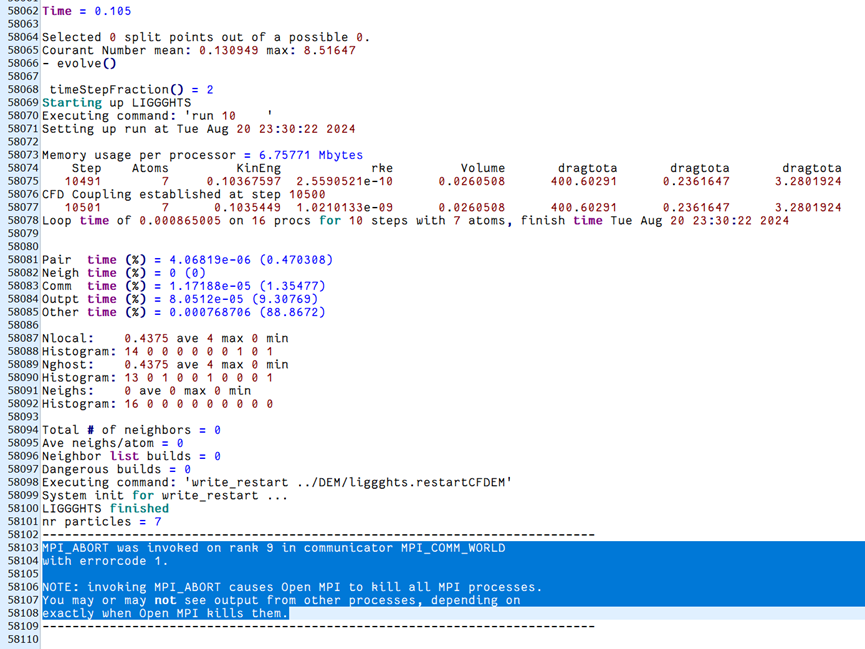

CFDEM run aborts, MPI_ABORT was invoked on rank 9

The simulation runs normally with 6 spheres, but after increasing the number of spheres to 7 this error appeared.

The above shows the run with 7 spheres behaving normally.

-

CFDEM run aborts, MPI_ABORT was invoked on rank 9

What could be causing this?