mpirun shows the job as running in parallel, but the log file has no output

-

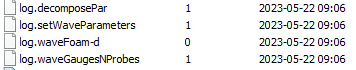

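I am running a case on a cluster. It runs fine on a single core, but when I submit it to the cluster the job is shown as running while the log file (log.waveFoam-d) contains no data, as shown in the screenshot: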

The script used to submit the parallel run is as follows:

    # Source tutorial run functions
    . $WM_PROJECT_DIR/bin/tools/RunFunctions

    # Set application name
    application="waveFoam"

    # Create the computational mesh
    echo Preparing 0 folder...
    rm -fr 0
    cp -r 0.org 0
    rm -rf constant/polyMesh
    cp -r constant/polyMesh.org constant/polyMesh

    # Create the wave probes
    runApplication waveGaugesNProbes

    # Compute the wave parameters
    runApplication setWaveParameters

    # Set the wave field
    runApplication setWaveField

    # decomposePar
    decomposePar > log.decomposePar
    mpirun -np 20 renumberMesh -overwrite -parallel > log.renumberMesh

    # Run the application
    #runApplication $application
    mpirun -np 20 waveFoam -parallel > log.waveFoam-d

    # To a post-processing analysis
    ln -s postProcessing/surfaceElevationAnyName surfaceElevationAnyName
    runApplication postProcessWaves2Foam

    # Reconstruct
    #runApplication reconstructPar
    rm -f /tmp/nodes.$$
    rm -f /tmp/nodefile.$$

Could the problem be the mpirun path under my cluster account?

-

@洱聿 said in "mpirun shows the job as running in parallel, but the log file has no output":

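@洱聿 When I stop the run manually, an error file is produced. I had assumed the error was caused by stopping the run, so I never paid attention to it. It shows the following error: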

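    [manage01:228000] [[60655,0],0] tcp_peer_recv_connect_ack: received different version from [[60655,1],1]: 1.8.8 instead of 1.10.2

The cluster currently uses MPI version 1.10.2. Is foam-extend 4.0 incompatible because this MPI version is too new? Is there an Open MPI version specifically matched to fe40?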
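The error message reports two Open MPI versions (1.8.8 and 1.10.2) talking to each other. This suggests that the `mpirun` launcher and the MPI library loaded by the solver come from different installations, not that 1.10.2 is too new as such. The sketch below is one way to compare the two. It assumes the foam-extend environment has already been sourced and that `waveFoam` is on `PATH`. The helper name `extract_version` is made up for illustration, and the output on a given cluster will differ.

```shell
#!/bin/sh
# Sketch: report which MPI the shell and the solver would each pick up.
# Assumption: the foam-extend environment has been sourced beforehand.

# Hypothetical helper: pull the first dotted version number
# (e.g. 1.10.2) out of a line of text.
extract_version() {
    printf '%s\n' "$1" | grep -oE '[0-9]+\.[0-9]+(\.[0-9]+)?' | head -n 1
}

# MPI launcher that is found first on PATH
if command -v mpirun >/dev/null 2>&1; then
    launcher=$(command -v mpirun)
    launcher_version=$(extract_version "$(mpirun --version 2>&1 | head -n 1)")
    echo "mpirun on PATH: $launcher (version ${launcher_version:-unknown})"
else
    echo "mpirun on PATH: not found"
fi

# MPI that the foam-extend environment points at
echo "WM_MPLIB:       ${WM_MPLIB:-unset}"
echo "MPI_ARCH_PATH:  ${MPI_ARCH_PATH:-unset}"

# MPI library the solver binary resolves at run time
if command -v waveFoam >/dev/null 2>&1; then
    ldd "$(command -v waveFoam)" | grep -i mpi \
        || echo "waveFoam:       no MPI library in ldd output"
else
    echo "waveFoam:       not found on PATH"
fi
```

If the launcher path lies outside `MPI_ARCH_PATH`, or the library path printed by `ldd` belongs to a different Open MPI version than the launcher, the run is probably mixing two installations. In that case, calling the `mpirun` that belongs to the MPI the solver was built with (or rebuilding against the cluster MPI) would be the thing to try.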

Hello, has your problem been solved?